BOND-Artificial Intelligence#2

#model #BOND #Part2 #developer #ecosystem #technology

#2. The Evolution of AI: Models, Performance, Cost, and Developer Ecosystem

Based on insights from BOND's 2025 AI Trend Report

In just a few years, AI has grown from a niche technology into a general-purpose engine reshaping industries.

At the core of this transformation are increasingly powerful models, falling compute and inference costs, smarter algorithms, and a thriving ecosystem of developers.

From GPT-1 to GPT-4, we’ve seen more than just bigger numbers: we’ve seen a shift in how AI is built, delivered, and used. In this post, we’ll explore four key dimensions of AI’s technical evolution: model scale and performance, data, cost, and the developer ecosystem.

Model Scale and Performance

Massive Growth in Parameters

One of the clearest trends in AI is the exponential growth in model parameters.

GPT-1 had around 117 million parameters. OpenAI has not disclosed GPT-4’s size, but outside estimates put it at over 1 trillion.

This growth has enabled models to understand more nuanced patterns, respond more accurately, and generate more coherent outputs.

According to the BOND report, GPT-4 is 5x more efficient in training than its predecessors, and its inference speed has also improved significantly.

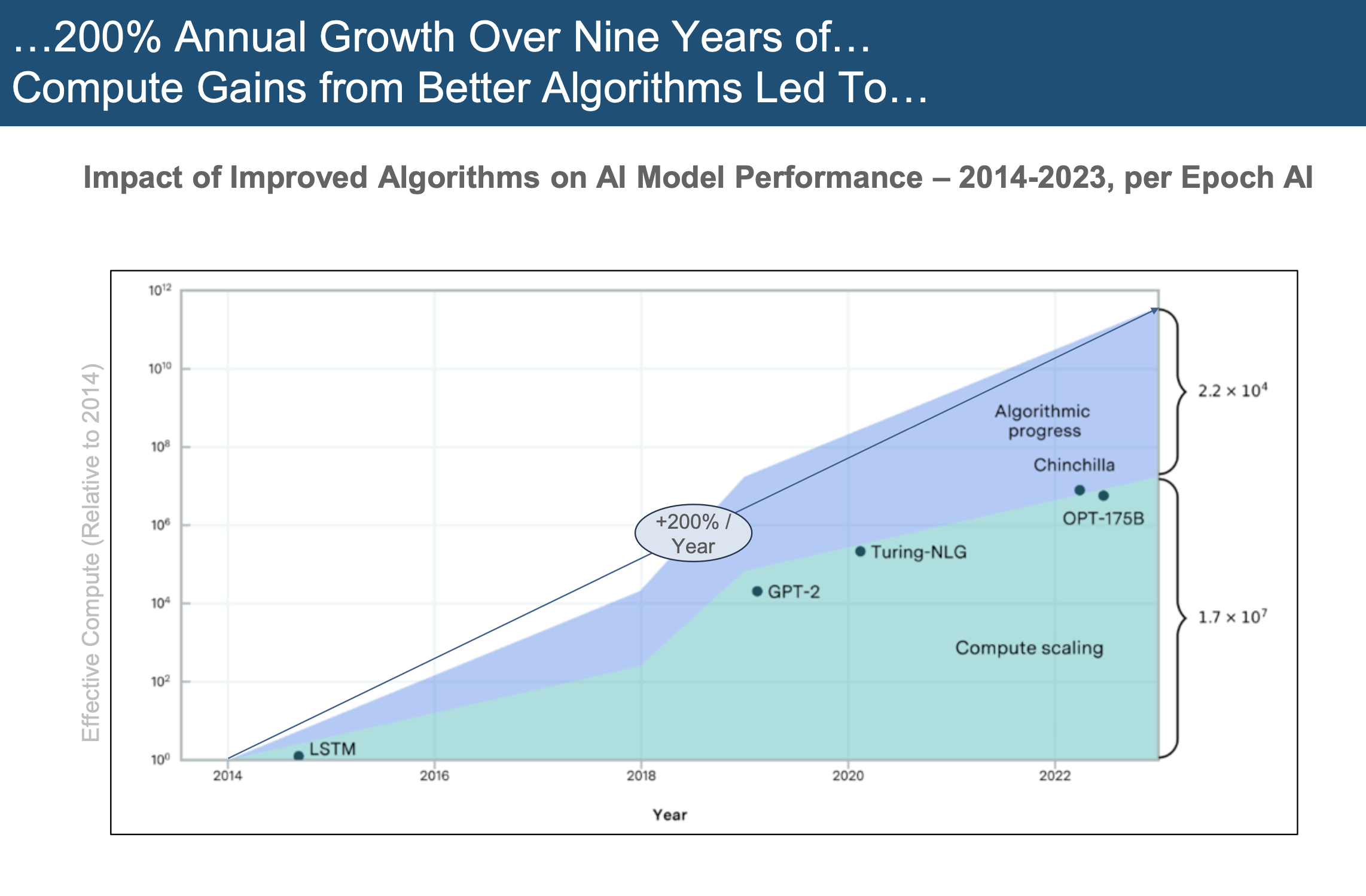

Compute and Algorithmic Efficiency

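From GPT-1 to GPT-4, the compute used for training (measured in FLOPs) has grown by many orders of magnitude; BOND puts the growth in training compute for leading AI models at roughly 360% per year.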

But it’s not just about brute force: the algorithms themselves have improved.

GPT-4, for instance, reaches higher accuracy than earlier models from fewer examples, such as a handful of demonstrations supplied in the prompt.

As BOND notes, smarter training techniques are allowing AI systems to learn more while using less.
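A yearly growth rate compounds quickly, which is why it says more than any single before-and-after ratio. The short Python sketch below compounds an annual percentage increase over several years, using +360% per year (the rate BOND reports for training compute of leading models) as an illustrative input. The function name is our own, and the result is a multiplier relative to an arbitrary starting point, not an actual FLOP count.

```python
def compound_growth(annual_increase_pct: float, years: int) -> float:
    """Return the total multiplier after `years` of growth at a given
    annual percentage increase (360.0 means +360%, i.e. 4.6x per year)."""
    yearly_factor = 1.0 + annual_increase_pct / 100.0
    return yearly_factor ** years


if __name__ == "__main__":
    # Illustrative input: +360% per year, compounded over 1, 2 and 5 years.
    for years in (1, 2, 5):
        print(f"{years} year(s): {compound_growth(360.0, years):,.1f}x")
```

At that rate, two years already means roughly a 21x increase, and five years means a factor of about two thousand.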

The Role of Data in AI’s Evolution

1. Bigger and Better Datasets

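The evolution of datasets has played a critical role in powering smarter models. Early milestones such as ImageNet (an image dataset for computer vision) were modest in scale compared with today’s web-scale text corpora such as the Pile and RefinedWeb, which supply diverse content at massive scale. Combined with large image and audio datasets, data at this scale underpins multimodal capabilities.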

Models such as GPT-4V (text and images) and Gemini (text, images, audio, and video) can now work across modalities, expanding their usefulness across countless industries.

2. Data Quality Matters

Quantity alone isn’t enough. The quality of data—and how it’s labeled and refined—has become just as important.
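"Refining" data usually starts with simple cleaning steps. The Python sketch below shows two common ones, exact deduplication and a length-based quality filter, under stated assumptions: `MIN_WORDS` is a hypothetical threshold, and real pipelines (RefinedWeb’s, for example) use far richer heuristics and fuzzy deduplication.

```python
import hashlib

MIN_WORDS = 5  # hypothetical cut-off for documents too short to be useful


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash the same."""
    return " ".join(text.lower().split())


def clean_corpus(docs: list[str]) -> list[str]:
    """Drop documents that are too short or exact duplicates after normalization."""
    seen: set[str] = set()
    kept: list[str] = []
    for doc in docs:
        norm = normalize(doc)
        if len(norm.split()) < MIN_WORDS:
            continue  # fails the length filter
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # duplicate of a document already kept
        seen.add(digest)
        kept.append(doc)
    return kept


if __name__ == "__main__":
    docs = [
        "The quick brown fox jumps over the lazy dog.",
        "the quick  brown fox jumps over the LAZY dog.",
        "Too short.",
    ]
    print(clean_corpus(docs))  # keeps only the first document
```

Hashing the normalized text keeps memory small even for large corpora, at the cost of catching only exact (post-normalization) duplicates.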

Human preference data and techniques like RLHF (Reinforcement Learning from Human Feedback) have substantially improved how well models follow instructions and align with human expectations.

The BOND report emphasizes how these methods contribute to more human-like responses and safer outputs.
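In a typical RLHF setup, humans compare pairs of model responses, and a reward model is trained to score the preferred response higher; that reward model then guides fine-tuning. The Python sketch below shows one common form of the reward-model objective, a pairwise (Bradley-Terry style) loss. The reward scores are made-up numbers standing in for a hypothetical reward model’s outputs, and the function names are our own.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)).

    Small when the human-preferred response scores higher than the rejected
    one, large when the reward model ranks them the wrong way round.
    """
    margin = reward_chosen - reward_rejected
    # Numerically stable evaluation of log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def batch_loss(pairs: list[tuple[float, float]]) -> float:
    """Average loss over (chosen, rejected) reward pairs from human comparisons."""
    return sum(preference_loss(c, r) for c, r in pairs) / len(pairs)


if __name__ == "__main__":
    # Made-up scores from a hypothetical reward model.
    correct_order = [(2.0, 0.5), (1.2, -0.3)]
    wrong_order = [(0.5, 2.0), (-0.3, 1.2)]
    print(batch_loss(correct_order) < batch_loss(wrong_order))  # True
```

Minimizing this loss pushes the reward model to agree with human rankings, which is how preference data ends up shaping the final model’s behavior.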

Cost Dynamics and AI Economics

1. Falling Compute Costs

AI models may be getting bigger, but each unit of computation is getting cheaper. Total training budgets for frontier models are still rising (GPT-4 is widely estimated to have cost far more to train than GPT-3), yet the cost per unit of compute keeps falling thanks to advances in:

Hardware: the transition from NVIDIA A100 to H100 GPUs

Data center efficiency: better cooling, optimization, and scalability

The decline is even more visible in inference, where the price per token has dropped sharply, making it feasible for more businesses to deploy AI products at scale.
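To see how per-token prices translate into the economics of a deployed product, here is a back-of-the-envelope Python cost model. All prices and traffic numbers are hypothetical placeholders, not quotes from any provider, and the function name is our own.

```python
def monthly_cost_usd(requests_per_day: int, input_tokens: int, output_tokens: int,
                     price_in_per_m: float, price_out_per_m: float,
                     days: int = 30) -> float:
    """Estimate monthly spend (USD) from per-million-token input/output prices."""
    cost_per_request = (input_tokens * price_in_per_m
                        + output_tokens * price_out_per_m) / 1_000_000
    return cost_per_request * requests_per_day * days


if __name__ == "__main__":
    # Hypothetical workload: 10,000 requests/day, 500 input + 300 output tokens each.
    workload = dict(requests_per_day=10_000, input_tokens=500, output_tokens=300)
    # Hypothetical "before" and "after" prices per million tokens.
    before = monthly_cost_usd(**workload, price_in_per_m=10.0, price_out_per_m=30.0)
    after = monthly_cost_usd(**workload, price_in_per_m=0.5, price_out_per_m=1.5)
    print(f"before: ${before:,.2f}/month, after: ${after:,.2f}/month")
```

Because cost scales linearly with price per token, a 20x drop in prices (as in these made-up numbers) turns a $4,200 monthly bill into $210 for the same traffic.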

2. Commercialization Becomes Viable

As costs drop, the economics of AI shift. What was once R&D-heavy and inaccessible is now becoming commercially viable for startups, enterprises, and developers alike.

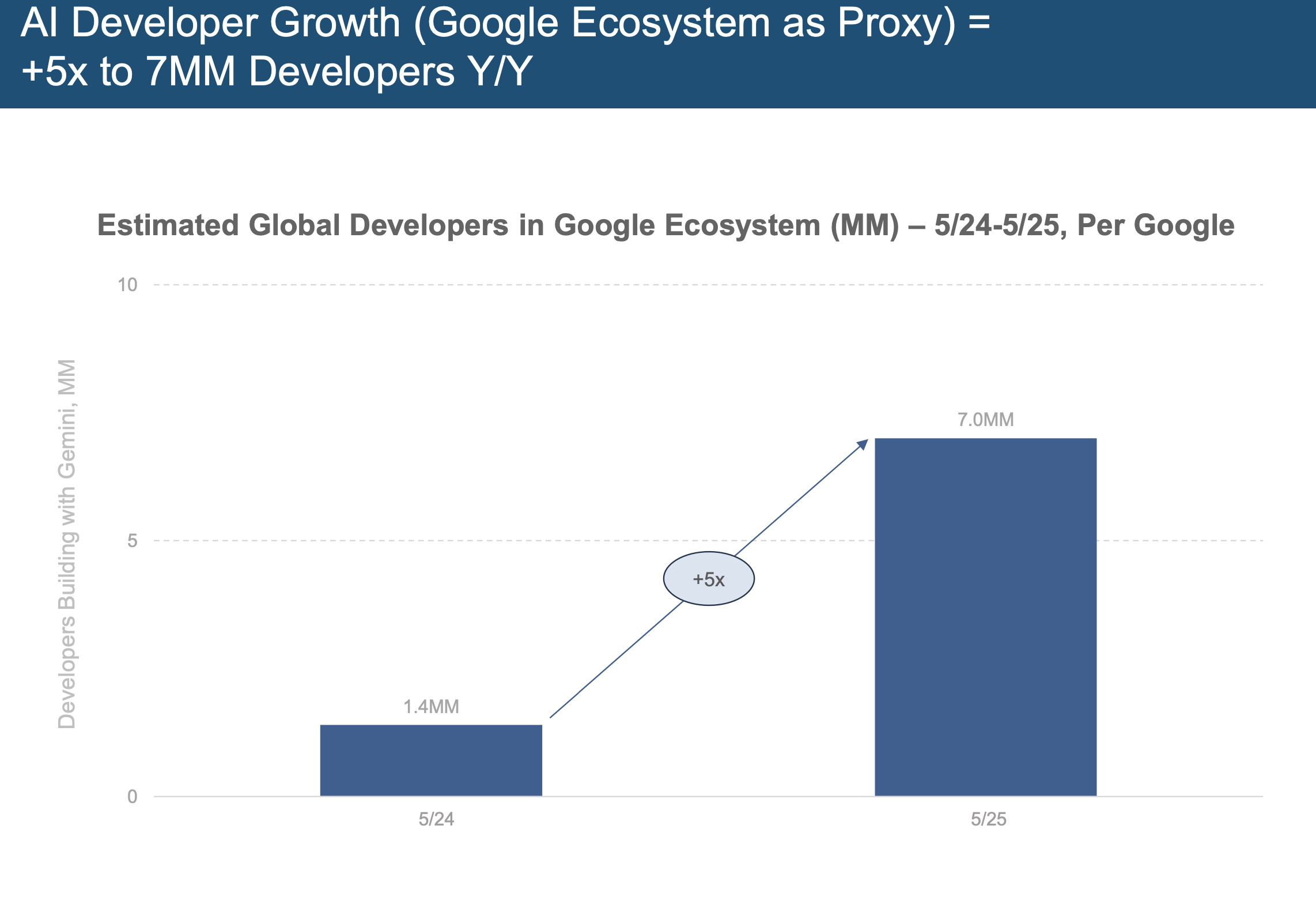

The Developer Ecosystem: Bigger, Faster, More Collaborative

1. Tools That Empower

The AI boom wouldn’t be possible without the ecosystem of tools and platforms that support it. Open-source frameworks like:

Hugging Face Transformers

TensorFlow

PyTorch

have enabled rapid collaboration and experimentation. Developers today can fine-tune powerful models without needing massive compute clusters.
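One widely used way to fine-tune without large clusters is parameter-efficient fine-tuning such as LoRA (Low-Rank Adaptation), available for example through Hugging Face’s PEFT library. LoRA freezes a pretrained weight matrix W (d × k) and trains two small matrices B (d × r) and A (r × k) whose product is added to W. The Python sketch below only counts trainable parameters for a single matrix; the sizes and rank are hypothetical example values, not those of any specific model.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Full fine-tuning updates every entry of the d x k weight matrix."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """LoRA keeps W frozen and trains B (d x r) and A (r x k) instead."""
    return r * (d + k)


if __name__ == "__main__":
    d = k = 4096  # hypothetical hidden size
    r = 8         # hypothetical LoRA rank
    full = full_finetune_params(d, k)
    lora = lora_params(d, k, r)
    print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
```

With these example values, LoRA trains under 0.4% of the parameters that full fine-tuning would update for that matrix, which is a large part of why a single GPU can often suffice.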

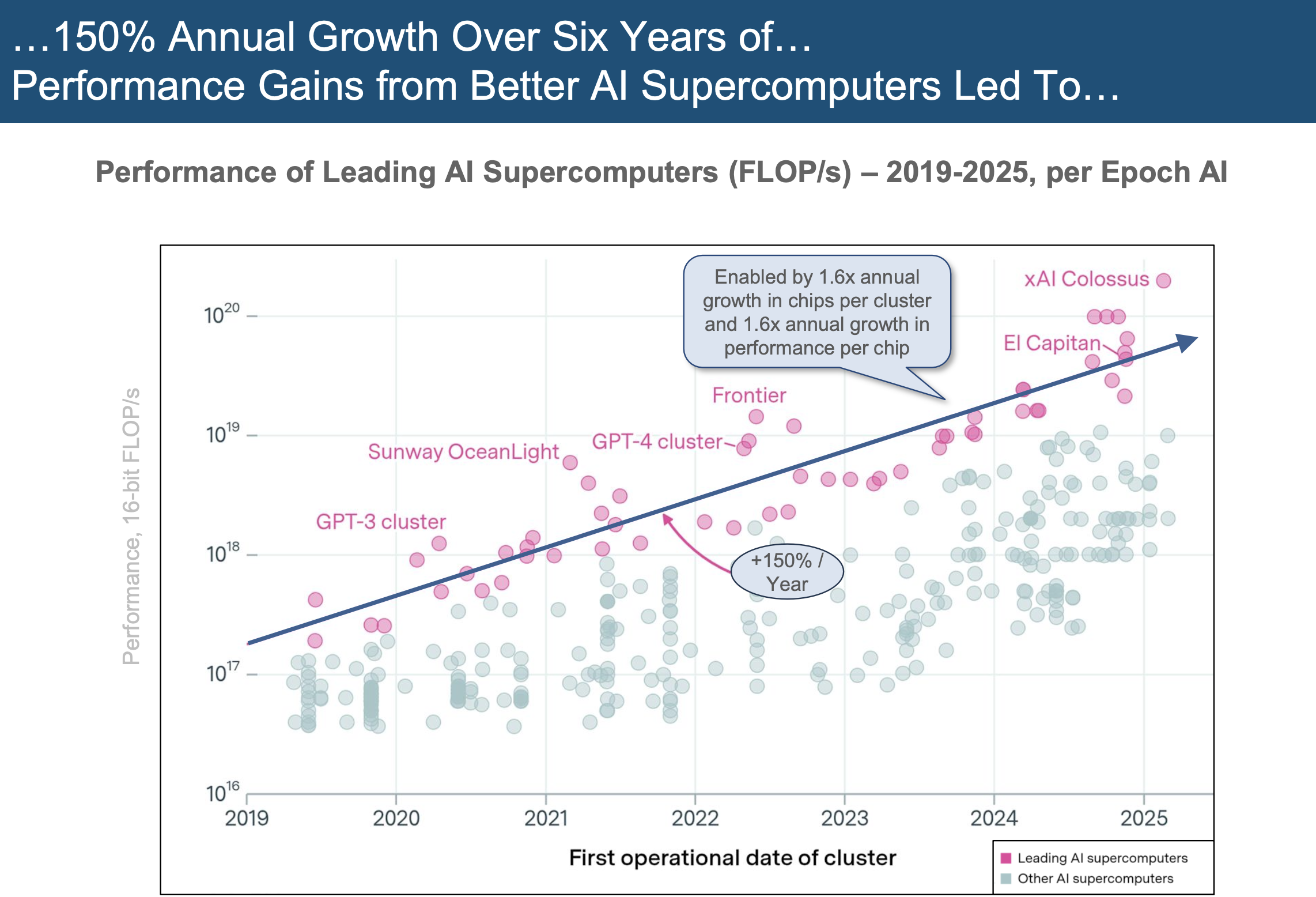

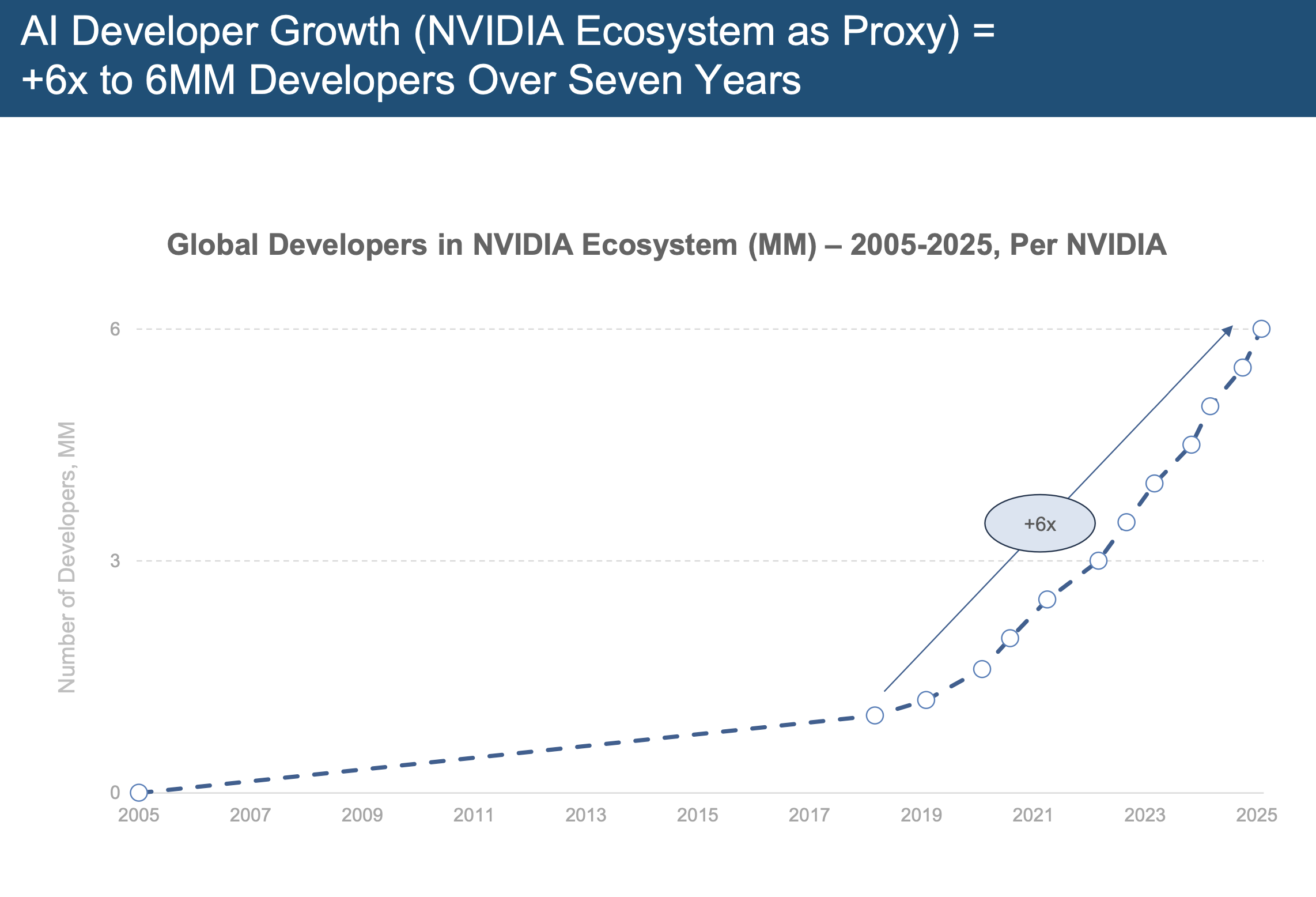

2. Hardware–Software Co-Evolution

Companies like NVIDIA are developing hardware and software together (for example, GPUs alongside the CUDA software platform) to drive the next generation of AI capabilities. This synergy is transforming the AI stack, from model development to deployment.

As a result, the AI ecosystem is becoming more open, collaborative, and diverse than ever before.

Final Thoughts: Preparing for the Next Leap

The evolution of AI isn’t just a technical story—it’s a societal and economic transformation.

With better performance, lower costs, more data, and a growing community, AI is poised to become the foundational layer of modern innovation.

For developers, business leaders, and policymakers, the time to engage is now.

To lead in the AI-driven world, we need more than adoption—we need deep understanding, experimentation, and constant adaptation.