The Rise of Offline AI - Logic

#OnDeviceAI #sLLM #LoRA #Phi3 #AIPrivacy #EdgeAI

How Local LLMs Work Without the Internet

For years, we’ve repeated the mantra:

“AI lives in the cloud.”

But that’s no longer the whole truth.

As of 2025, AI is going local.

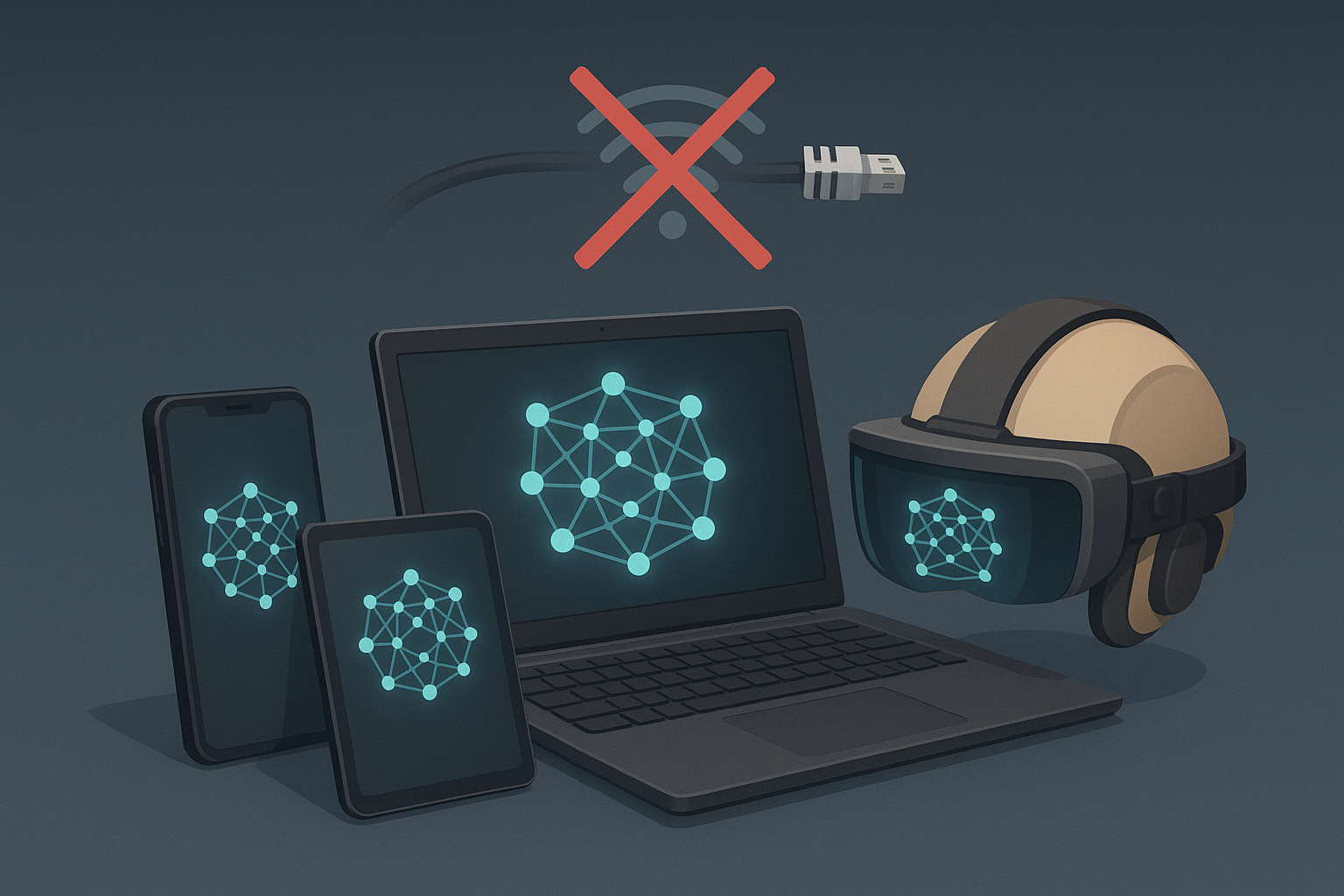

Thanks to rapid advances in compression, optimization, and lightweight architectures, we’re now witnessing the dawn of LLMs that run entirely offline—on laptops, Raspberry Pi devices, and even AR helmets.

Welcome to the era of Local LLMs—intelligent systems that don’t need the internet to reason, summarize, or write code.

Why Local LLMs Matter

So why is everyone talking about AI that runs without the cloud?

1. Unstable or No Network? No Problem.

In remote areas, disaster zones, military sites, or traditional manufacturing plants, internet access is often unreliable—or nonexistent.

Cloud-based AI simply fails in these environments. Local LLMs don’t.

2. Privacy by Default

Hospitals, banks, and R&D labs often handle sensitive data that can’t leave the premises.

With local inference, AI runs inside a closed network—no API calls, no external transmissions.

3. Speed and Cost Efficiency

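Cloud APIs come with both network latency and a per-request bill. Local LLMs, on the other hand, are:

- Free of per-token API fees (you still pay for hardware and power)

- Free of network round-trip latency, though generation speed depends on your hardware

- Unlimited in usage, with no rate limits or quotas

For many use cases, this trade-off is a no-brainer.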

Under the Hood: How Local AI Actually Works

The key to this shift? Compression and runtime optimization. Here's a breakdown of the core tech stack:

| Technology | What It Does | Example Use |
|---|---|---|
| LoRA | Trains small low-rank adapter matrices on top of frozen model weights instead of fine-tuning every parameter | Alpaca-LoRA |
| QLoRA | Combines 4-bit quantization of the base model with LoRA for memory-efficient fine-tuning | Guanaco |
| GGUF | File format for storing (typically quantized) models so that runtimes can load them on CPU-only machines | llama.cpp |
| Phi-3 Mini | Microsoft’s 3.8B-parameter small language model | Can run on a Surface or Raspberry Pi |

These aren’t just toy models. They deliver real-world performance while fitting on-device memory constraints.
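
To make the LoRA row concrete, here is a minimal sketch in plain Python. It assumes the commonly used formulation y = Wx + (alpha / r) · B(Ax), where W is the frozen pretrained matrix and only the small matrices A and B are trained. The helper names (`matvec`, `lora_forward`, `trainable_fraction`) and the toy 4x4 layer are hypothetical and chosen only for illustration; they are not taken from any real model or library.

```python
# Toy LoRA forward pass: y = W x + (alpha / r) * B (A x)
# W stays frozen; only A (r x d_in) and B (d_out x r) would be trained.

def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def lora_forward(W, A, B, x, alpha=1.0):
    r = len(A)                       # adapter rank = number of rows in A
    base = matvec(W, x)              # frozen pretrained path
    delta = matvec(B, matvec(A, x))  # low-rank update path
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]

def trainable_fraction(d_out, d_in, r):
    """Share of parameters LoRA trains compared with the full matrix."""
    return (r * d_in + d_out * r) / (d_out * d_in)

# Hypothetical 4x4 identity layer with a rank-1 adapter.
W = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
A = [[0.5, 0.0, 0.0, 0.0]]           # 1 x 4
B = [[0.0], [0.0], [0.0], [0.0]]     # 4 x 1, zero-initialised so the adapter starts as a no-op

x = [2.0, 1.0, 0.0, -1.0]
y_before = lora_forward(W, A, B, x)  # B is zero, so this equals W x
B[1][0] = 1.0                        # pretend training nudged one entry of B
y_after = lora_forward(W, A, B, x)   # W itself was never modified

# For a 4096 x 4096 layer at rank 8, the adapter is a small fraction of the full matrix.
frac = trainable_fraction(4096, 4096, 8)
```

In this toy setup the adapter changes the output while `W` is left untouched, and for a 4096 x 4096 layer at rank 8 the adapter holds well under 1% of the parameters of the full matrix. That is why adapter-style fine-tuning fits on much smaller hardware than full fine-tuning.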

Real-World Examples

Let’s look at how Local LLMs are already being used today:

Groq + Phi-3

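Groq’s LPU (Language Processing Unit) is an accelerator designed for very fast LLM inference, though it is typically deployed in data centers rather than built into consumer devices. On the device side, Microsoft’s Phi-3 family is small enough to run locally on a laptop such as the Surface Pro, which makes an offline personal AI assistant possible.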

Llama 3 on Raspberry Pi

Open-source communities have converted quantized Llama 3 models to GGUF format so they can run on devices like the Raspberry Pi. In areas like Nepal and Mongolia, such setups are reportedly being used for AI-powered education without internet access.

Smart Helmets with Embedded LLMs

In construction and military applications, AR helmets embedded with offline LLMs offer instant guidance on equipment, emergency protocols, and repairs.

But What About Learning?

A common misunderstanding is that running a Local LLM means the model is “learning from you.”

In reality, here's how it works:

1. Pretraining

The heavy lifting is done by AI labs (OpenAI, Meta, Google, etc.) using massive GPU clusters. These models are trained on large text corpora such as books, code, and Wikipedia.

2. Quantization + Conversion

Using tools like llama.cpp, models are quantized and converted to GGUF (or, with other toolchains, exported to formats like ONNX) so they can run locally, even without a GPU.
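
As a rough illustration of what quantization does, the sketch below rounds a small block of weights to signed 4-bit integers that share a single scale, then estimates how much space the weights alone would take at different bit widths. This is a simplified symmetric scheme written for this article, not the actual quantization types used inside GGUF files, and the function names are hypothetical. The 3.8 billion figure is Phi-3 Mini's approximate parameter count.

```python
# Symmetric block quantization sketch: every weight in a block shares one scale.

def quantize_block(block, bits=4):
    qmax = 2 ** (bits - 1) - 1                       # 7 for signed 4-bit
    scale = max(abs(w) for w in block) / qmax or 1.0  # fall back to 1.0 for an all-zero block
    q = [max(-qmax, min(qmax, round(w / scale))) for w in block]
    return scale, q

def dequantize_block(scale, q):
    """Recover approximate float weights from the integers and the shared scale."""
    return [scale * v for v in q]

def weight_gigabytes(n_params, bits_per_weight):
    """Rough size of the weights alone, ignoring scales and file metadata."""
    return n_params * bits_per_weight / 8 / 1e9

block = [0.7, -0.33, 0.12, 0.0]   # made-up example weights
scale, q = quantize_block(block)
restored = dequantize_block(scale, q)
max_err = max(abs(a - b) for a, b in zip(block, restored))

fp16_gb = weight_gigabytes(3.8e9, 16)   # about 7.6 GB at 16 bits per weight
int4_gb = weight_gigabytes(3.8e9, 4)    # about 1.9 GB at 4 bits per weight
```

The rounding error per weight stays within half a quantization step, and the footprint shrinks roughly fourfold going from 16-bit to 4-bit weights. That reduction is what lets a multi-billion-parameter model fit into the RAM of a laptop or single-board computer.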

3. Local Inference

Once deployed, the model executes—but doesn’t update itself. It answers your questions using fixed, pre-trained weights.
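
The point about fixed weights can be shown with a toy example. The sketch below uses a tiny hand-written table of next-token scores as a stand-in for pretrained weights and runs greedy decoding over it. The table, the tokens, and the `generate` function are all hypothetical; a real local runtime works on tensors, but the read-only relationship between inference and weights is the same.

```python
import copy

# Toy "model": a frozen table of next-token scores (stand-in for pretrained weights).
WEIGHTS = {
    "local": {"models": 0.9, "cloud": 0.1},
    "models": {"run": 0.8, "learn": 0.2},
    "run": {"offline": 0.7, "online": 0.3},
}

def generate(prompt_token, weights, max_new_tokens=3):
    """Greedy decoding: repeatedly pick the highest-scoring next token."""
    tokens = [prompt_token]
    for _ in range(max_new_tokens):
        options = weights.get(tokens[-1])
        if not options:              # no known continuation: stop early
            break
        tokens.append(max(options, key=options.get))
    return tokens

snapshot = copy.deepcopy(WEIGHTS)        # copy taken before inference
output = generate("local", WEIGHTS)
weights_unchanged = WEIGHTS == snapshot  # inference only reads the weights
```

However many prompts you run, the table is identical afterwards. Anything the model appears to "remember" has to come from somewhere else, such as the prompt itself.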

So… does it ever actually learn?

No, your local LLM isn’t evolving on its own. But you can fine-tune it manually for specific tasks.

| Feature | Description | Learning? |
|---|---|---|
| Context Memory | Remembers recent prompts in the same session | 🟡 Temporary only |
| Vector Memory | Stores embeddings in a local vector DB (e.g. ChromaDB) and retrieves them at query time | 🟡 Not true learning |
| LoRA Fine-tuning | Manually trains adapters on custom data, often on rented GPUs (e.g. Colab, RunPod) | ✅ But requires setup |
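
The first two rows can be sketched in a few lines of plain Python. Context memory is modelled here as a bounded window of recent turns, and vector memory as a list of (embedding, text) pairs searched by cosine similarity. The three-dimensional "embeddings", the stored documents, and the `retrieve` helper are made up for illustration; a real setup would get vectors from an embedding model and keep them in a vector database.

```python
import math
from collections import deque

# Context memory: a bounded window of recent turns; the oldest turn falls off.
context = deque(maxlen=3)
for turn in ["hi", "my name is Kim", "what is GGUF?", "thanks"]:
    context.append(turn)

# Vector memory: store (embedding, text) pairs and retrieve by cosine similarity.
def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def retrieve(store, query_vec, k=1):
    """Return the k stored texts whose embeddings are closest to the query."""
    ranked = sorted(store, key=lambda item: cosine(item[0], query_vec), reverse=True)
    return [text for _, text in ranked[:k]]

# Hand-made 3-d "embeddings" (hypothetical values, not from a real embedding model).
store = [
    ([1.0, 0.0, 0.0], "Pump maintenance manual"),
    ([0.0, 1.0, 0.0], "Evacuation protocol"),
    ([0.0, 0.0, 1.0], "Cafeteria menu"),
]
hits = retrieve(store, [0.1, 0.9, 0.0])
```

In both cases the information lives outside the model: the window forgets old turns, and the store only hands text back to be pasted into the prompt. Neither changes the weights, which is why the table marks them as not true learning.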

What’s Next? Local Agents

The future isn’t just local LLMs—it’s local AI agents.

These will:

- Remember your workflow across devices

- Offer personalized suggestions without Wi-Fi

- Respect your data privacy by design

Imagine an AI that knows your habits, projects, and preferences—without ever calling home.

It’s no longer about “offline ChatGPT.”

It’s about owning a true on-device assistant.

Final Thoughts: “AI Without Internet Is Here”

We’ve entered a new phase in AI democratization.

The freedom to run smart models without cloud dependency isn’t just a technical shift—it’s a social one.

It brings autonomy, security, and privacy back into the hands of individuals.

Whether it’s on your desk, in your pocket, or embedded in your AR headset—AI is finally becoming yours.